Futurology

For Robin Hanson, history is important and interesting to study, but the future is also important, and plausibly more important, because we can actually do something about it. He brings his varied background in physics, philosophy, artificial intelligence and social science to the study of the potential future of brain emulations in his new book, "The Age of Em."

"What happens when a first-rate economist applies his rigor, breadth, and curiosity to the sci-fi topic of whole brain emulations?" asks Andrew McAfee, co-author of Race Against the Machine. The answer for McAfee is Robin Hanson's new book, The Age of Em: Work, Love and Life when Robots Rule the Earth.

Hanson is an associate professor of economics at George Mason University and a research associate at the Future of Humanity Institute of Oxford University. He also has master's degrees in physics and philosophy from the University of Chicago, nine years' experience in artificial intelligence research at Lockheed and NASA, and a doctorate in social science from the California Institute of Technology. He has also long written on his blog, Overcoming Bias.

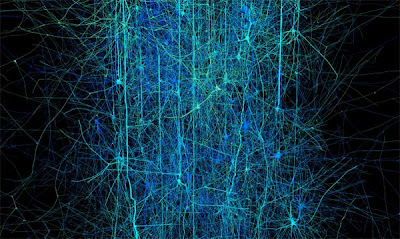

"The Age of Em" is Hanson's in-depth analysis of the idea that uploaded brains will be able to multiply exponentially, and will dramatically shape the future. Many think the first truly smart robots will be brain emulations or ems. Scan a human brain, then run a model with the same connections on a fast computer, and you have a robot brain, but recognizably human.Hanson defines an em as, the result of taking a particular human brain, scanning it to record its particular cell features and connections, and then building a computer model that processes signals according to those same features and connections. "A good enough em has close to the same overall input-output signal behavior as the original human. One might talk with it, and convince it to do useful jobs," he writes.

Train an em to do some job and copy it a million times: an army of workers is at your disposal. When they can be made cheaply, within perhaps a century, ems will displace humans in most jobs. Also, Hanson projects that ems will be able to run much faster than human speeds. In this new economic era, the world economy may double in size every few weeks.

A brain emulation, or “em,” results from taking a particular human brain, scanning it to record its particular cell features and connections, and then building a computer model that processes signals in the same way. Ems will probably be feasible within about a hundred years. They are psychologically quite human, and could displace humans in most jobs. If fully utilized, ems could have a monumental impact on all areas of life on Earth.

Hanson says, "Like a circus side show, my book lets readers see something strange yet familiar in great detail, so they can gawk at what else changes and how when familiar things change. My book is a dwarf, sword swallower, and bearded lady, writ large."

Ambitious and encyclopedic in scope, The Age of Em