Once considered nothing more than science fiction, laser technology now dominates certain arenas of medicine.

Somewhat ironically, most surgical procedures involve creating additional trauma in order to heal the trauma for which the patient first sought help. For example, to replace a joint damaged in an accident, the surgeon must cut through skin, muscle and cartilage to remove the damaged joint and replace it with a prosthetic. Perhaps it was this paradox that prompted the search for an alternative to conventional procedures and, eventually, the development of laser treatment, which is less traumatic, invasive and painful than traditional surgery.

Understanding Laser Treatment

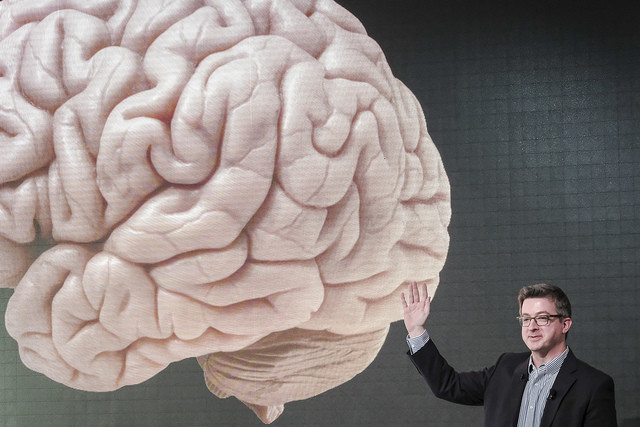

Laser therapy uses laser energy non-invasively to trigger a photochemical response in dysfunctional or damaged tissue. This type of treatment can accelerate recovery from a broad range of chronic and acute conditions, as well as reduce inflammation and relieve pain. According to rehabilitation therapists, the primary goal of treatment for most patients with debilitating, painful conditions is to improve mobility and function, and laser treatment offers a surgery-free, drug-free route to that goal.

Laser surgery has also replaced conventional surgery in many areas of medicine. In 2013, a team of researchers from Harvard University and the University of Michigan published a study demonstrating the benefits and effectiveness of a cutting-edge, laser-based “scalpel” that relies on a phenomenon called Raman scattering. The technique uses light scattered by tissue to identify brain tumors, whose cellular structure contrasts with that of the surrounding healthy tissue. This is just one example of technological advances that may revolutionize medical procedures in the future.

The Use of Lasers for Non-Catastrophic Illnesses

Advances in laser technology are not limited to severe illnesses or major operations. In fact, most of society’s ailments are everyday struggles with manageable conditions, such as regulating insulin in diabetes, poor vision or hereditary baldness. Laser treatment has also led to one of the greatest advances in eye surgery: laser-assisted in situ keratomileusis, better known as LASIK, which corrects vision in nearsighted and farsighted individuals.

The Role of Laser Treatment in the Future

The future of Class IV laser treatment seems very bright, even though certain advances are still years away. One example is transparent skull implants, which researchers are currently studying. Scientists are trying to determine whether laser light can be shone into a patient’s brain to help surgeons perform various procedures without having to open the skull. The University of California scientists overseeing the research project have called it a vital first step toward eliminating an invasive brain surgery procedure called a craniectomy, in which part of the skull is removed. The alternative would allow a laser to pass through a tiny “window” in the skull to complete certain surgeries.

Laser treatment may also eliminate the scalpel entirely in the future, just as lobotomies and bloodletting became obsolete, elbowed aside by newer, safer and more efficient procedures.

Cell Evaluation and Tissue Regeneration

Laser technology also has a role in regenerating tissues and cells. Professor Woo, of the Wake Forest University School of Medicine, uses lasers to build supporting structures that hold tissue implants in place. The laser works somewhat like a drill, boring holes in the supporting structure that guide new cells in the right direction as they mature. Lasers may also help medical engineers design small devices that cause less pain and injury at injection sites than conventional techniques.

Lasers are also valuable diagnostic tools because they allow non-invasive probing of live tissue.

Safety

Despite these exciting possibilities, the safety of laser treatment needs further evaluation. For instance, exposure to light and heat can damage tissue and cause burns, so laser use must be carefully monitored. One of the biggest obstacles to getting lasers past medical regulatory boards is concern about radiation exposure. Fortunately, advances in laser technology and its therapeutic use have reduced the amount of light to which tissues are exposed during laser surgery. A femtosecond laser device approved by the FDA in 2010 was one of the first instruments designed specifically to lessen tissue exposure.

Ultimately, research into this non-invasive treatment is likely to continue as the 21st century progresses. Hopefully, it will lead to better and more efficient treatments and procedures in the future.

Top Image by Andrea Pacelli


After graduating from medical school at the University of Michigan, Isaac started his own private orthopedic practice in Riverton, Utah. Having faced and overcome many of the obstacles that come with entrepreneurship and small business ownership, Isaac has found a passion for helping the up-and-coming generation thrive in their careers and, ultimately, their lives.