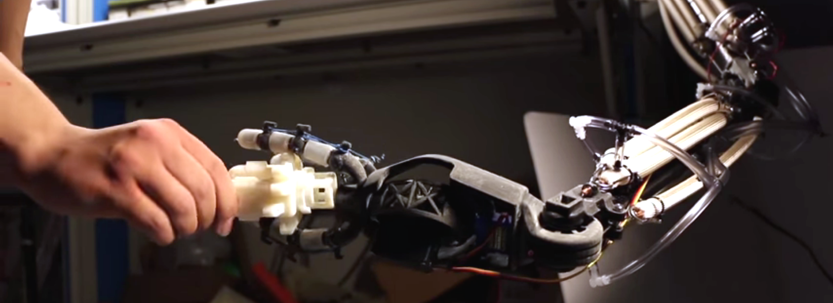

Robotics

Robots made entirely out of soft materials could be real game-changers. They could integrate more easily with human activities ranging from the ordinary to the exceptional. A group of engineers at Carnegie Mellon University is working to make such soft robots a hard reality.

Source: NOVA PBS

By 33rd Square